While working at the Robohub, other students and I needed a way to track the locations of objects for various tasks. My own project also needed the locations of several points on each object, all derived from a detected position. I created this framework to track multiple objects using multiple tracking systems, along with points of interest on each object.

This framework serves as a:

- Wrapper around ROS tf code, particularly transform listeners

- Wrapper around the transforms between points on an object

- Combination of tracking systems through a plugin setup

Usage

This framework works by aggregating the results of other object detection platforms. A plugin for listening to detected Aruco markers has been developed as an example.
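The plugin setup can be pictured roughly as follows. This is a minimal sketch, not the framework's actual code: the names `TrackingPlugin`, `ArucoPlugin`, `Detection`, and `Aggregator` are hypothetical, and the Aruco "message" is assumed to be a plain dict mapping marker ids to poses rather than a real ROS message.

```python
from abc import ABC, abstractmethod
from dataclasses import dataclass
from typing import Dict, List, Tuple

# Hypothetical pose format: ((x, y, z), (qx, qy, qz, qw)) in the sensor/world frame.
Pose = Tuple[Tuple[float, float, float], Tuple[float, float, float, float]]


@dataclass
class Detection:
    system: str     # which tracking system produced it, e.g. "aruco" or "vicon"
    marker_id: str  # identifier matched against the markers known to the framework
    pose: Pose


class TrackingPlugin(ABC):
    """Hypothetical interface that each tracking system implements."""

    name: str

    @abstractmethod
    def parse(self, message) -> List[Detection]:
        """Convert one raw message from the tracking system into detections."""


class ArucoPlugin(TrackingPlugin):
    name = "aruco"

    def parse(self, message) -> List[Detection]:
        # Assumption: message is a dict {int marker id: pose}. A real plugin
        # would read the fields of the detector's ROS message instead.
        return [Detection(self.name, f"aruco_{mid}", pose) for mid, pose in message.items()]


class Aggregator:
    """Routes raw messages to the matching plugin and keeps the latest detection per marker."""

    def __init__(self, plugins: List[TrackingPlugin]):
        self.plugins: Dict[str, TrackingPlugin] = {p.name: p for p in plugins}
        self.latest: Dict[str, Detection] = {}

    def on_message(self, system: str, message) -> None:
        for detection in self.plugins[system].parse(message):
            self.latest[detection.marker_id] = detection


# Example: one Aruco detection of marker 7 at (1.0, 0.0, 0.5) with identity orientation.
aggregator = Aggregator([ArucoPlugin()])
aggregator.on_message("aruco", {7: ((1.0, 0.0, 0.5), (0.0, 0.0, 0.0, 1.0))})
print(aggregator.latest["aruco_7"])
```

Adding another tracking system (for example Vicon) would then mean writing one more `parse` method, without touching the aggregation logic.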

In code, the user can define an object, then define the details and locations of markers placed on it (e.g., Aruco or Vicon markers). When messages containing detected markers are received, they are matched against the markers known to the framework. The detected poses of the markers are used to calculate the object’s pose, which is in turn used to calculate the poses of its points of interest.
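The pose chain described above can be sketched with rigid transforms. Assuming a detected marker pose `world_T_marker` and the marker's known placement on the object `object_T_marker`, the object pose is `world_T_marker * inverse(object_T_marker)`, and each point of interest is the object pose composed with that point's fixed offset. The helper names are hypothetical, and plain Python lists stand in for a real transform library such as tf.

```python
import math
from typing import List, Tuple

Mat3 = List[List[float]]
Vec3 = List[float]
# A rigid transform parent_T_child stored as (R, t), so that p_parent = R @ p_child + t.
Transform = Tuple[Mat3, Vec3]


def compose(a: Transform, b: Transform) -> Transform:
    """Return a * b: maps points from b's child frame into a's parent frame."""
    Ra, ta = a
    Rb, tb = b
    R = [[sum(Ra[i][k] * Rb[k][j] for k in range(3)) for j in range(3)] for i in range(3)]
    t = [sum(Ra[i][k] * tb[k] for k in range(3)) + ta[i] for i in range(3)]
    return R, t


def invert(a: Transform) -> Transform:
    """Inverse of a rigid transform: (R^T, -R^T t)."""
    R, t = a
    Rt = [[R[j][i] for j in range(3)] for i in range(3)]
    return Rt, [-sum(Rt[i][k] * t[k] for k in range(3)) for i in range(3)]


def rot_z(theta: float) -> Mat3:
    """Rotation by theta radians about the z-axis."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]


IDENTITY: Mat3 = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]


def object_pose(world_T_marker: Transform, object_T_marker: Transform) -> Transform:
    """Object pose in the world, from one detected marker and its known placement."""
    return compose(world_T_marker, invert(object_T_marker))


def point_pose(world_T_object: Transform, object_T_point: Transform) -> Transform:
    """World pose of a point of interest rigidly attached to the object."""
    return compose(world_T_object, object_T_point)


# Example (made-up offsets): marker mounted 0.1 m along the object's x-axis and 0.05 m up.
object_T_marker = (IDENTITY, [0.1, 0.0, 0.05])
object_T_red_point = (IDENTITY, [0.2, 0.0, 0.0])

# Simulate a detection of an object at (1, 2, 0) yawed by 90 degrees.
true_world_T_object = (rot_z(math.pi / 2), [1.0, 2.0, 0.0])
world_T_marker = compose(true_world_T_object, object_T_marker)

world_T_object = object_pose(world_T_marker, object_T_marker)
_, red_position = point_pose(world_T_object, object_T_red_point)
print([round(v, 6) for v in red_position])  # [1.0, 2.2, 0.0]
```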

Demonstration

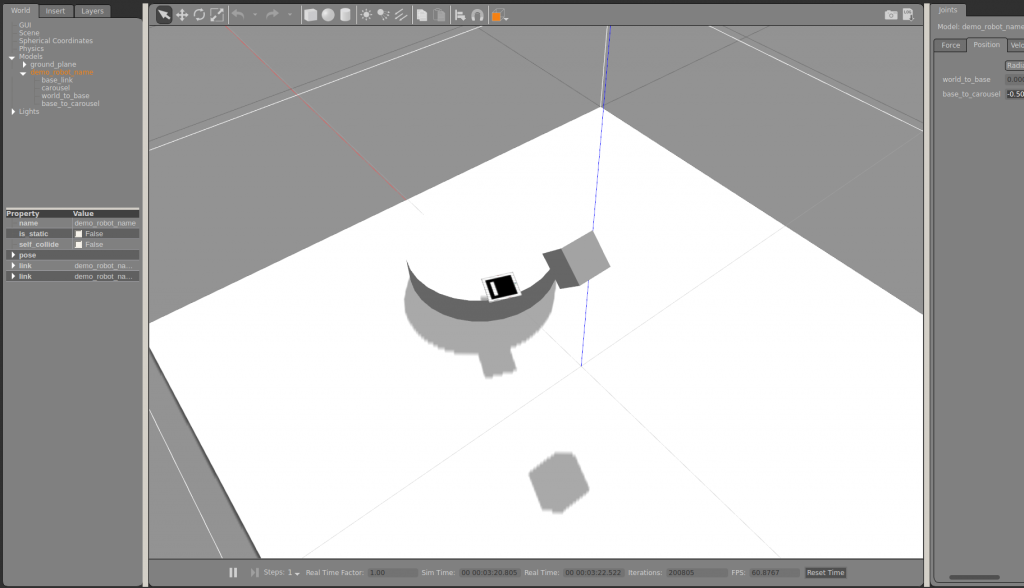

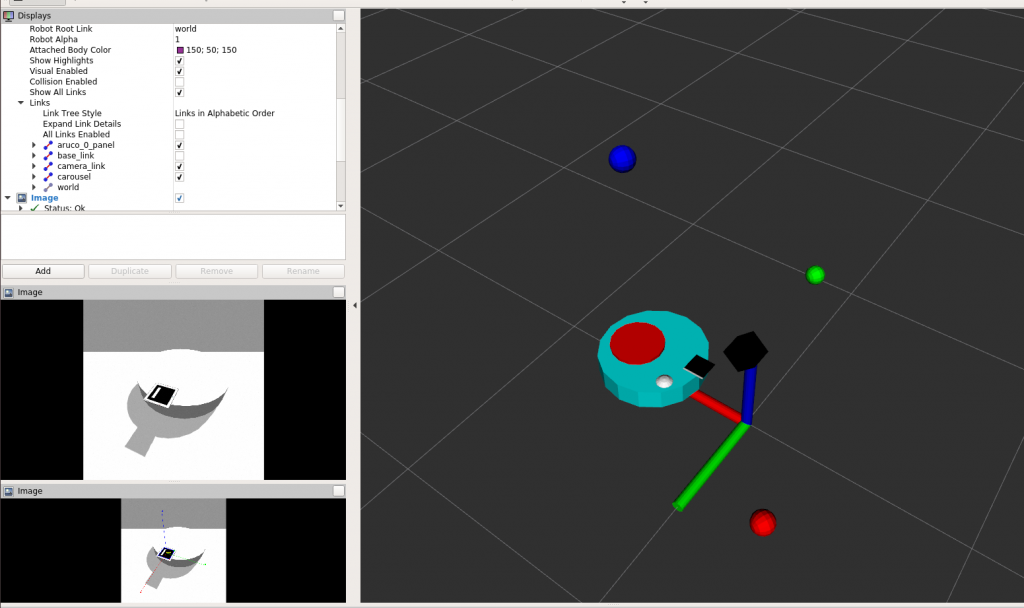

I’ve created a demonstration using a robot in Gazebo, with outputs shown in Rviz. The robot consists of a base link and a spinning ‘carousel’ link (blue) with an Aruco tag placed on it. The system tracks an object representing the carousel link (red), using the known placement of the Aruco tag (white sphere) as detected by a simulated camera (black box). I have also defined points of interest along the axes of the object (red/green/blue spheres), which spin along with the tracked object. Rather than manually calculating their positions from the object’s current rotation, a user can call code analogous to `object.get_position("red_axis_point")` and get an updated position/orientation.
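Below is a hypothetical sketch of what a call like `get_position` could do behind the scenes. It is restricted to planar (x, y, yaw) poses for brevity, which is enough for a carousel spinning about its vertical axis. The class and method names (`TrackedObject`, `add_marker`, `add_point`, `on_detection`) and the offsets are illustrative assumptions, not the framework's real API.

```python
import math
from typing import Dict, Optional, Tuple

Pose2D = Tuple[float, float, float]  # (x, y, yaw) of a child frame in its parent frame


def compose(a: Pose2D, b: Pose2D) -> Pose2D:
    """Pose of frame b expressed in a's parent frame (a * b)."""
    x, y, th = a
    bx, by, bth = b
    return (x + math.cos(th) * bx - math.sin(th) * by,
            y + math.sin(th) * bx + math.cos(th) * by,
            th + bth)


def invert(a: Pose2D) -> Pose2D:
    """Inverse planar transform: translation -R^T t, rotation -yaw."""
    x, y, th = a
    c, s = math.cos(th), math.sin(th)
    return (-c * x - s * y, s * x - c * y, -th)


class TrackedObject:
    """Hypothetical tracked object: known marker placements plus named points of interest."""

    def __init__(self, name: str):
        self.name = name
        self.markers: Dict[str, Pose2D] = {}  # marker id -> marker pose in the object frame
        self.points: Dict[str, Pose2D] = {}   # point name -> point pose in the object frame
        self.pose: Optional[Pose2D] = None    # latest object pose in the world frame

    def add_marker(self, marker_id: str, placement: Pose2D) -> None:
        self.markers[marker_id] = placement

    def add_point(self, name: str, offset: Pose2D) -> None:
        self.points[name] = offset

    def on_detection(self, marker_id: str, world_pose: Pose2D) -> None:
        # Ignore detections of markers that do not belong to this object.
        if marker_id in self.markers:
            self.pose = compose(world_pose, invert(self.markers[marker_id]))

    def get_position(self, point_name: str) -> Pose2D:
        if self.pose is None:
            raise RuntimeError(f"{self.name} has not been detected yet")
        return compose(self.pose, self.points[point_name])


carousel = TrackedObject("carousel")
carousel.add_marker("aruco_0", (0.3, 0.0, 0.0))
carousel.add_point("red_axis_point", (0.5, 0.0, 0.0))

for yaw in (0.0, math.pi / 2):
    # Simulated detection: the carousel spun to `yaw`, carrying the marker with it.
    carousel.on_detection("aruco_0", compose((0.0, 0.0, yaw), (0.3, 0.0, 0.0)))
    x, y, _ = carousel.get_position("red_axis_point")
    print(f"yaw={yaw:.2f}: x={x:.2f}, y={y:.2f}")
```

Because each point of interest is stored as a fixed offset in the object frame, it follows the object's rotation automatically; only the object pose needs updating when a new detection arrives.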

(I haven’t yet found out why the Aruco marker does not move when viewed in Rviz.)

Future Work

While the current system has worked well for my project and for others’, it has some important limitations. After the bulk of my thesis work is done, I hope to revisit it to:

- Formalize the transformations used (“translation between X and Y in the frame of Z”)

- Add EKF-based motion models (constant-position, constant-velocity)

- Filter and combine readings from multiple tracked markers on each object

- Support custom modifications to object position readings (e.g., “this object should lie flat on the table surface”)

- Separate out the demonstration code to decouple its dependencies
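As one possible reading of the first item, “translation between X and Y in the frame of Z” can be computed as the world-frame difference of the two positions, rotated into Z’s axes: R_Z^T (p_Y - p_X). The function below is a hypothetical sketch of that definition, not existing framework code.

```python
import math
from typing import List, Sequence

Vec3 = Sequence[float]
Mat3 = Sequence[Sequence[float]]


def translation_between(p_x: Vec3, p_y: Vec3, R_world_z: Mat3) -> List[float]:
    """Translation from X to Y, expressed in the axes of frame Z.

    p_x, p_y: positions of X and Y in the world frame.
    R_world_z: orientation of frame Z in the world (its columns are Z's axes).
    Returns R_world_z^T (p_y - p_x).
    """
    d = [p_y[i] - p_x[i] for i in range(3)]
    # Multiplying by the transpose projects the world-frame difference onto Z's axes.
    return [sum(R_world_z[k][i] * d[k] for k in range(3)) for i in range(3)]


# Example: Z is yawed 90 degrees, so Z's x-axis points along world +y.
c, s = math.cos(math.pi / 2), math.sin(math.pi / 2)
R_world_z = [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]
print([round(v, 6) for v in translation_between([1.0, 0.0, 0.0], [1.0, 2.0, 0.0], R_world_z)])
# [2.0, 0.0, 0.0]
```

Only Z’s orientation enters the result: the difference of two points is a free vector, so Z’s position does not matter.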

Feedback

“The object tracking framework served my development needs elegantly. I integrated it with ease into a project which needed various tracking capabilities using ArUco markers. The framework is useful because of its implementation of query points which makes tracking specific locations on objects a breeze. After a discussion on some new features for the framework, Wesley was able to add them within days, a testament to his well-structured approach to software development!”

– Sagar Rajendran, MASc Student, UW